Table of Contents

- 1. What is Kuali Rice?

- 2. Software Architecture

- 3. Technical Overview

- 4. Installation Steps

- 5. Generating the Keystore

- 6. Tuning Kuali Rice 1.0.3

- A. Installing the Database Management Systems

- B. Example Server Configurations

- C. Building Rice from Source

- D. Setting Up a Load-Balanced Clustered Production Environment

- E. Running Multiple Instances of Rice Within a Single Tomcat Instance

- Glossary

List of Figures

- 3.1. Kuali Rice 0.0.16-test-SNAPSHOT Architectural Diagram

- 3.2. Kuali Service Bus

- 3.3. Supported Service Protocols

- 3.4. KIM Architecture Diagram

- 3.5. KIM Architecture Detail

- 3.6. Kuali Nervous System

- 3.7. KIM Architecture Detail

- 3.8. Conceptual Production Architecture, example 1

- 3.9. Conceptual Production Architecture, example 1

- 3.10. Recommended Conceptual Production Architecture

- 3.11. Recommended Conceptual Production Architecture

- 4.1. Rice Portal Main Menu

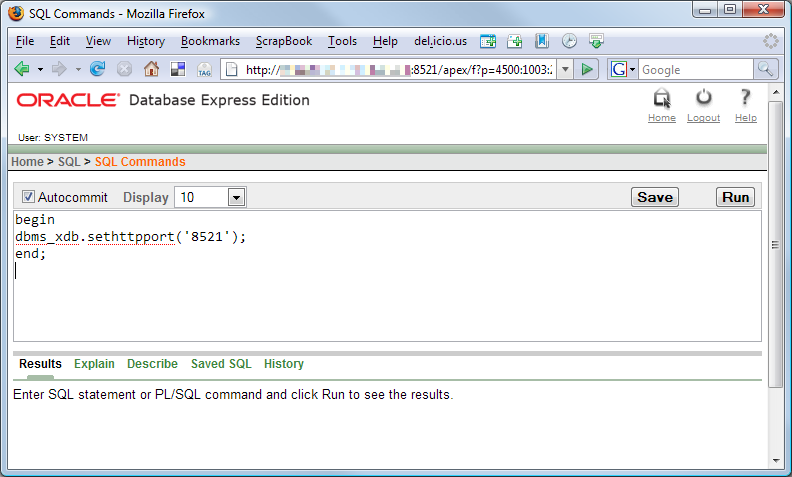

- A.1. Oracle XE admin webapp

Table of Contents

Kuali Rice (also simply known as Rice) is an open source, module-based, enterprise class, set of integrated middleware products that allow both Kuali and non-Kuali applications to be built in an agile fashion so developers can create custom end-user business applications quickly and efficiently. Services are exposed through the Kuali Service Bus (KSB) and can be consumed by other Rice applications.

Rice employs the Service Oriented Architecture (SOA) concept and is structured with both a server-side piece and a client-side piece. This framework allows end developers to build robust systems with common enterprise workflow functionality and with customizable and configurable user interfaces that have a clean and universal look and feel.

On the server side, Kuali Rice is implemented as a group of services within a Servlet container. It can also run as a module within a web server such as Apache. Kuali Rice implements the Java Servlet specification from Sun Microsystems. This allows developers to design software that adds dynamic content to web servers using the Java programming language. Servlets are a server side technology that responds to web clients (typically web browsers) through a request/response paradigm.

On the client side, Kuali Rice has a flexible framework of pieces that can be included in a Rice client application.

The Rice Standalone Server is built on the client-server model and is provided as a web archive file (WAR). The Standalone version allows client applications to be configured to interface with the Rice server.

The designers of Kuali Rice built it with a modular architecture where each module performs a specific function that offers services to applications. The Rice architecture has five major modules:

Kuali Service Bus (KSB)

Kuali Enterprise Workflow (KEW)

Kuali Enterprise Notification (KEN)

Kuali Identity Management (KIM)

Kuali Nervous System (KNS)

Note

The Kuali Nervous System is not administered from the Rice Standalone Server because it is a framework and not an application.

Rice provides a reusable development framework that encourages a simplified approach to developing true business functionality in modular applications.

Application and service developers can focus on solutions to solve business issues rather than on the technology. The Rice framework takes care of complex technical issues so that each application or service that adopts the framework can interoperate with little or no complexity. The framework also simplifies interoperation with services exposed by other applications.

In addition, Rice supports the sophisticated workflow processes typically required in higher education. It addresses workflow processes that involve human interaction (i.e., approval) as part of the flow.

Portions of Kuali are copyrighted by other parties, who are not listed here. Refer to NOTICE.txt and the licenses directory for complete copyright and licensing information. Questions about licensing should be directed to licensing@kuali.org.

Kuali Rice is available from the Kuali Foundation as open source software under the Educational Community License, version 2.0.

Table of Contents

Kuali Rice is available for two types of implementations, Server (also known as client-server) and Bundled (packaged with Kuali applications).

The Server is the most versatile implementation of Rice. It is a web application that can provide services to multiple applications that integrate with Kuali Rice at your institution. The server distribution contains a web archive or WAR file for the Kuali Rice standalone server. The Server distribution is the one you should use when your enterprise wants to run multiple Kuali applications or when you want to integrate other applications with Rice.

When an application bundles all of the Rice functionality (including what is usually handled by the standalone server) into the client application, it’s called a Bundled Distribution. For example, Kuali Financial Systems (KFS) has done this for their past releases. In those KFS releases, you did not need to set up and install a Rice standalone server; the necessary Rice functionality was bundled with KFS.

Bundled Distributions are not recommended for enterprise implementations, but are good for quick start, testing, and demonstrations.

The Source Code Distribution is available if you want to build Rice from scratch and create the standalone or binary libraries yourself.

The Binary Distribution (also known as the client distribution) is a collection of JAR files. It is used when other applications need to use your Rice implementation and you won’t be using the Rice web application. It is designed for embedding Rice and can be used as a set of libraries for client applications.

The Binary Distribution of Kuali Rice is implemented as an application framework consisting of application programming interfaces (APIs), libraries, and the web framework. This allows you to construct a Kuali Rice application. All JARs and web content are included in this version.

In a typical enterprise deployment of Kuali Rice, a Standalone Rice server hosts numerous shared services and provides the most versatility. The composition of Rice contains an application framework — the APIs, Libraries, and web framework that are used to construct a Rice application. You can configure subsequent Rice client applications to interact with these services as needed.

All three distributions, as well as the source code of the latest production release are available at here.

Table of Contents

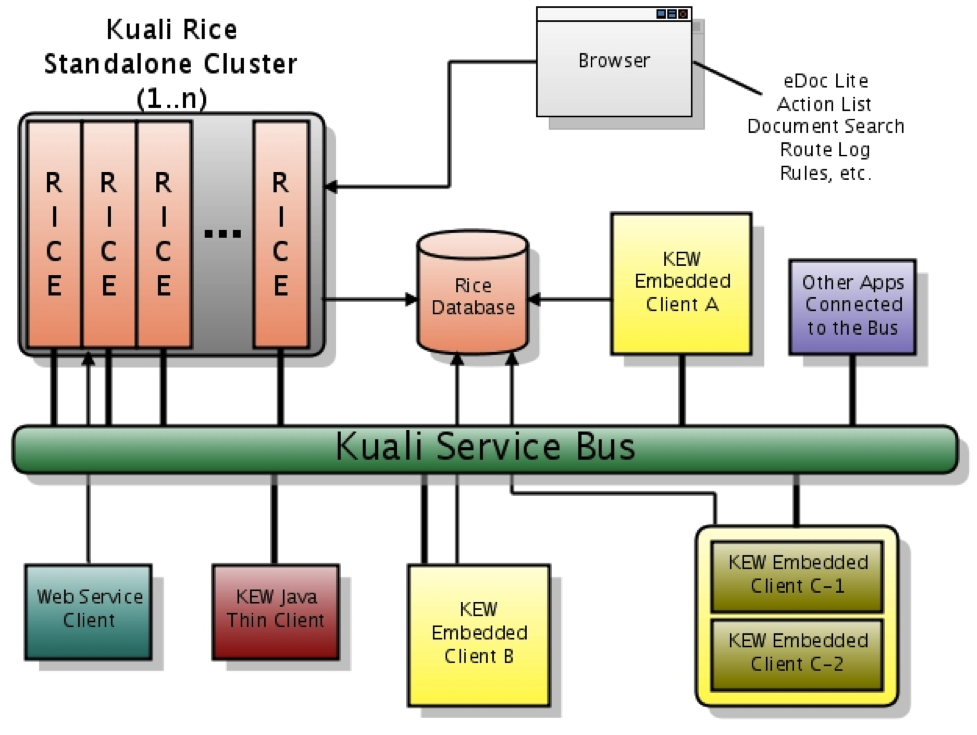

This is a high-level picture of what an Enterprise Deployment of Rice might look like. This diagram includes representations of various client applications interacting with the Rice standalone server:

Kuali Rice has five core modules, linked together by the Kuali Service Bus (KSB):

KSB (Kuali Service Bus )

Kuali Service Bus is a simple service bus geared toward easy service integration in an SOA.

KEW (Kuali Enterprise Workflow)

Kuali Enterprise Workflow provides a common routing and approval engine that facilitates the automation of business processes across the enterprise. KEW was specifically designed to address the requirements of higher education, so it is particularly well suited for routing mediated transactions across departmental boundaries.

KEN (Kuali Enterprise Notification)

Kuali Enterprise Notification acts as a enabler for all university business-related communications by allowing end-users and other systems to push informative messages to the campus community in a secure and consistent manner.

KIM (Kuali Identity Management)

Kuali Identity Management provides central management features for person identity characteristics, groups, roles, permissions, and their relationships to each other. All integration with KIM is accomplished using simple and consistent service APIs (Java or Web Service). KIM is built like all of the Kuali applications with Spring at its core, so that you can implement your own Identity Management (IdM) solutions behind the Service APIs. This provides you with the option to override the reference service implementations with your own to integrate with other Identity and Access Management systems at your enterprise.

KNS (Kuali Nervous System)

Kuali Nervous System is a software development framework that enables developers to quickly build business applications in an efficient and agile fashion. KNS is an abstracted layer of “glue” code that provides developers easy integration with the other Rice components.

Kuali Rice is designed to run in a clustered environment and can be run on virtual machines.

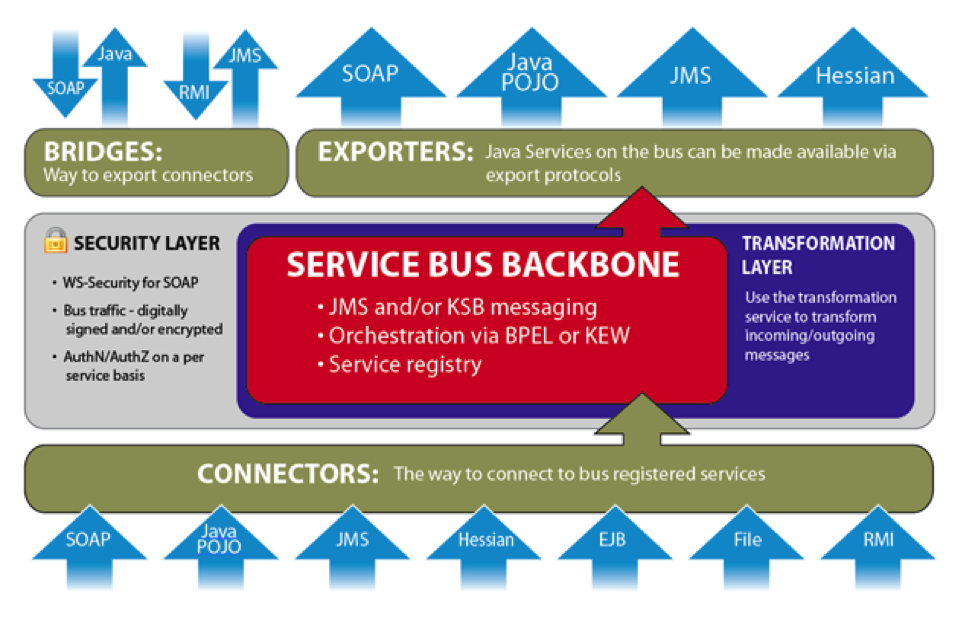

The Kuali Service Bus (KSB) is a lightweight service bus designed so developers can quickly develop and deploy services for remote and local consumption. You deploy services to the bus either using the Spring tool or programmatically. Services must be named when they are deployed to the bus. Services are acquired from the bus using their name.

At the heart of the KSB is a service registry. This registry is a listing of all services available for consumption on the bus. The registry provides the bus with the information necessary to achieve load balancing, failover, and more.

Transactional Asynchronous Messaging – Call services asynchronously to support a 'fire and forget' model of calling services. Messaging participates in any existing JTA transactions (messages are not sent until the current running transaction is committed and are not sent if the transaction is rolled back). This increases the performance of service-calling code because it does not wait for a response.

Synchronous Messaging - Call any service on the bus using a request-response paradigm.

Queue Style Messaging - Execute Java services using message queues. When a message is sent to a queue, only one of the services listening for messages on the queue is given the message.

Topic Style Messaging - Execute Java services using messaging topics. When a message is sent to a topic, all services listening for messages on the topic receive the message.

Quality of Service - This KSB feature determines how queues and topics handle messages with problems. Time-to-live is supported, giving the message a configured amount of time to be handled successfully before exception handling is invoked for that message type. Messages can be given a specified number of retry attempts before exception handling is invoked. An increasing delay separates each calling. Exception handlers can be registered with each queue and topic for custom behavior when messages fail and Quality of Service limits have been reached.

Discovery - Automatically discover services along the bus by service name. You do not need end-point URLs to connect to services.

Reliability - Should problems arise, messages sent to services via queues or synchronous calls automatically fail-over to any other services bound to the same name on the bus. Services that are not available are removed from the bus until they come back online, at which time they will be rediscovered for messaging.

Persisted Callback - Send callback objects with any message. These objects will be called each time a service is “requested.” This provides a mechanism to pass along a message. In this way, deployed services can communicate back to a “callback registrant,” such as an application registering a callback, with application data even as that data is moving through the system.

Primitive Business Activity Monitoring - If turned on, each call to every service, including the parameters passed into that service, is recorded.

Spring-Based Integration - KSB is designed with Spring-based integration in mind. For example, you might make an existing Spring-based POJO available for remote asynchronous calls.

Programmatic Integration - If you do not use Spring configuration, you can configure KSB programmatically. Services can also be added and removed from the bus programmatically at runtime.

Typically, KSB programming is centered on exposing Spring-configured beans to other calling code using a number of different protocols. Using this paradigm, the client developer and the organization can rapidly build and consume services.

Note

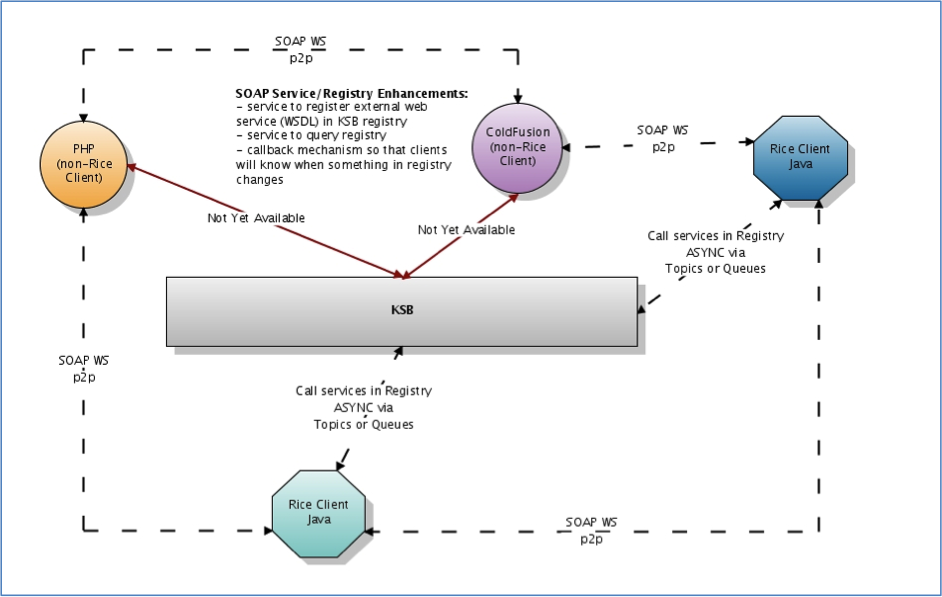

This drawing is conceptual and not representative of true deployment architecture.

Essentially, the KSB is a registry with service-calling behavior on the client end (for Java clients). All policies and behaviors (aysnc vs. sync) are coordinated on the client.

KSB offers clients some very attractive messaging features:

Synchronization of message sending with currently running transaction (In other words, all messages sent during a transaction are ONLY sent if the transaction is successfully committed.)

Failover: If a call to a service comes back with a 404 (or various other network-related errors), the client will try to call other services of the same name on the bus. This is for both sync and async calls.

Load balancing: Clients will round-robin call services of the same name on the bus. Proxy instances are, however, bound to single machines. This is useful if you want to keep a line of communication open to a single machine for long periods of time

Topics and Queues: Used for controlling the execution of services

Persistent messages: When using message persistence, a message cannot be lost. It will be persisted until it is sent.

Message Driven Service Execution: Bind standard JavaBean services to messaging queues for message-driven beans

The Kuali Enterprise Workflow (KEW) is a content-based routing engine. To enter the routing process, a user creates a document from a process definition and submits it to the workflow engine for routing. The engine then makes routing decisions based on the XML content of the document.

KEW is built for educational institutions to use for business transactions in the form of electronic documents that require approval from multiple parties. For example, these types of transactions are capably handled with KEW:

Transfer funds

Hire and terminate employees

Complete and approve timesheets

Drop a course

KEW is a set of services, APIs, and GUIs with these features:

Action List for each user, also known as a user’s work list

Document searching

Route log: Document audit trail

Flexible process definition: Splits, joins, parallel branches, sub-processes, dynamic process generation

Rules engine

Email notification

Notes and attachments

Wide array of pluggable components to customize routing and other pieces of the system

eDocLite: Framework for creating simple documents quickly

Plugin architecture: Packaging and deployment of application plugins or deployment of routing components to the Rice standalone server at runtime

Kuali Enterprise Notification (KEN) acts as a broker for all university business-related communications by allowing end-users and other systems to push informative messages to the campus community in a secure and consistent manner. All notifications process asynchronously and are delivered to a single list where other messages such as workflow-related items (KEW action items) also reside. In addition, end-users can configure their profile to have certain types of messages delivered to other end-points such as email, mobile phones, etc.

Easily leverage its functionality through the KSB or over SOAP

Access a full list of archives and logs so that you can easily find messages that were sent in the past

Eliminate sifting through your email inbox to find what you need

It guarantees delivery of messages, even to large numbers of recipients

A Single List for All Notifications: Find the things you have to do, things you want to know about, and things you need to know about. This includes workflow items from KEW, all in one place.

Eliminate Email Pains: Don't sift through piles of spam to find that one thing you need to do. You control who uses KEN, so there is no spam.

Flexible Content Types: No core programming is needed to customize the fields and data for a notification. You may use XML, XSD, and XSL to dynamically extend, validate, and render new content types.

Multiple Integration Interfaces: Use KEN’s Java services and web services (exposed on the KSB) to send messages from one system to another, or use the Rice generic message-sending form (with workflow built in) to send messages by hand.

Audit Trail: Track exactly who received a notification and when they received it.

Multiple Ways to Notify: All messages are sent to a user's notification list; however, users can also choose to have "ticklers" sent to their email inboxes, their mobile phones, and more. You can also build pluggable "ticklers" using the KEN framework.

Robust Searching and List Capabilities: Search for notifications by multiple fields such as priority, type, senders, and more. Save searches for later, and take actions on your notifications right from your list.

Security: Basic authorization comes out of the box along with single-sign-on. In addition, web service calls support SSL transport encryption and digital signing using X.509 certificates. KEN also allows you to build your own security plugins.

User and Group Management: Basic user and group management are provided, along with hooks for customizing KEN to point at other identity management systems, such as LDAP.

The Kuali Identity Management (KIM) provides identity and access management services to Rice and other applications. All KIM services are available on the service bus with both SOAP and Java serialization endpoints. KIM provides a service layer and a set of GUIs that you can use to maintain identity information.

KIM provides a reference implementation of services. It also allows customization and/or replacement to facilitate integration with institutional services or other third-party identity management solutions. This allows the core KIM services to be overridden piecemeal. For example, you can override the Identity Service, but keep the Role Service.

KIM consists of these services, which encompass its API:

Read-only services:

IdentityService

GroupService

PermissionService

RoleService

ResponsibilityService

AuthenticationService

Update services that allow write operations

A permission service that evaluates permissions: KIM provides plug points for implementing custom logic for permission checking, such as permission checks based on hierarchical data.

A more detailed visual of the KIM architecture:

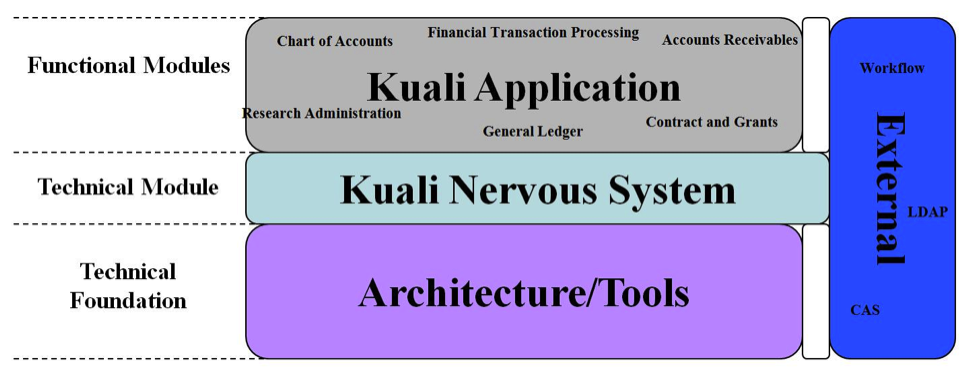

The Kuali Nervous System (KNS) is the core of the Kuali Rice system. It embraces a document- centric (business process) model that uses workflow as a central concept. It is also the web application development framework for Rice and is the core technical module in Rice, leveraging reusable code components to provide functionality.

The Kuali Nervous System is a:

Framework to enforce consistency

Means to adhere to development standards and architectural principles

Stable core for efficient development

Means of reducing the amount of code written through code re-use

Since the builders of the Rice platform constructed it on open source technologies, your scale and use of Rice software determine the layout of the logical and physical hardware you need to support your implementation. Below are several conceptual models for implementation of Rice that are certainly not end solutions. Your solution depends on your implementation scale and budget.

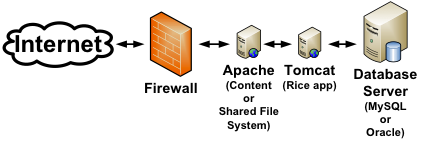

The production platform that you deploy for your implementation can vary quite widely. The first example, the most basic platform structure, would be the most economical solution in terms of hardware. The Tomcat server can serve up all web service and HTTP requests and store all content. (Of course, you could load-balance multiple Tomcat servers across machines.) A picture of the logical structure:

For this architecture, we recommend this minimum:

Server running Tomcat container: Minimum 2 GB main memory

The next example has you offload the web requests and content to an Apache HTTP server in front of the Tomcat server:

For this architecture, we recommend this minimum:

Server running Apache: Minimum 1 GB main memory

Server running Tomcat container: Minimum 2 GB main memory

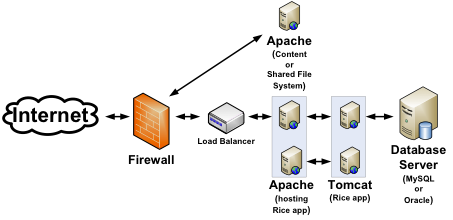

And finally, the recommended solution, where the focus of the environment structure is based upon maximal scaling for the Rice application:

For this architecture, we recommend this minimum:

Load Balancer

Each server running Apache: Minimum 1 GB main memory

Each server running Tomcat container: Minimum 2 GB main memory

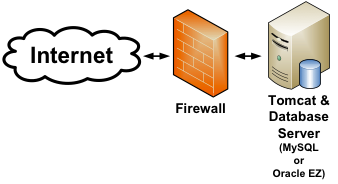

The most basic platform for development has the Tomcat container and a MySQL server running on the same machine as your development tools:

The best option is to use the Tomcat container to serve up web service and HTTP requests. This has the least number of software layers between your development/debugging/IDE environment and the Tomcat container.

Table of Contents

Kuali Rice has the potential to run on most platforms that support a Java development environment (not simply a runtime environment), a servlet container, and an Oracle or MySQL relational database management system (RDBMS).

Warning

Only platforms and configurations that have been tested and are known to work with Rice are described within this guide.

Note that hardware needs may vary depending on the amount of expected load, the operating system being used, and the number of applications that are integrated with Kuali Rice.

Kuali Rice is typically deployed with the database server separate from the application server. Requirements below are for the Rice Standalone application server.

The recommended minimum requirements are as follows:

Processor 1.5 GHz or faster (2 GHz preferred)

1024 MB (1 GB) of RAM or more

100 Mbit/s network card (gigabit preferred)

200 MB of hard disk space (for Tomcat server and web application)

Additional space needed if storing attachments

Since Kuali Rice is written in Java, it should in theory be able to run on any operating system that supports the required version of the Java runtime. However, it has been most actively tested on:

Windows (XP and 7)

Mac OS X (10.6)

Linux (Ubuntu)

Note that while Ubuntu Linux is the distribution most frequently used for testing, other Linux distributions such as Fedora, Red Hat Enterprise Linux, CentOS, Gentoo, and others should also be able to run Kuali Rice.

Additionally, Kuali Rice will likely work on these operating systems, although the software has not been tested here:

Sun Microsystems Solaris

IBM AIX

All of these packages are required on the server with Rice:

Sun Microsystems Java Development Kit (JDK 1.6.x)

Warning

You must use a JDK and not a Java runtime environment (JRE); the JDK you use must be version 1.6.x. Additionally, Rice has not been tested on JDKs other than Sun. So alternative implementations like OpenJDK should be used at your own risk.

Servlet container (Apache Tomcat 5.5+, or Jetty 6.1.1)

Apache Ant 1.8.1

Maven 3

Ensure that the Oracle database you intend to use encodes character data in a UTF variant by default. For Oracle XE, this entails downloading the "Universal" flavor of the binary, which uses AL32UTF8.

Rice runs, and has been tested with the following products:

Oracle 10g

Oracle Database 10g Release 2 (10.2.0.4)

Oracle Database 10g Release 2 (10.2.0.4) JDBC Driver

MySQL

MySQL 5.1.+

MySQL Connector/J (5.1.+)

You can adapt Rice to other standard relational databases (e.g., Sybase, Microsoft SQL Server, DB2, etc.). However, this Installation Guide does not provide information for running Rice with these products.

Table 4.1.

| Software | Download Location |

|---|---|

| Sun Java JDK 1.6.x | http://java.sun.com/javase/downloads/index.jsp |

| Apache Ant 1.7.1 | http://mirror.olnevhost.net/pub/apache/ant/binaries/apache-ant-1.7.1-bin.tar.gz |

| Maven 2.0.9 | http://archive.apache.org/dist/maven/binaries/apache-maven-2.0.9-bin.tar.gz |

| MySQL Connector/J JDBC Driver | http://www.mysql.com/products/connector/j/ |

| Oracle 10g XPress | http://www.oracle.com/technology/products/database/xe/index.html |

| Oracle JDBC DB Driver | http://www.oracle.com/technology/software/tech/java/sqlj_jdbc/htdocs/jdbc_10201.html |

| Squirrel SQL client | http://squirrel-sql.sourceforge.net/ |

| Tomcat 5.5.27 | http://mirrors.homepagle.com/apache/tomcat/tomcat-5/v5.5.27/bin/apache-tomcat-5.5.27.zip |

The Kuali Rice software is available through three different distributions:

Table 4.2.

| Distribution | Description |

|---|---|

| Binary | This distribution consists of all the necessary binaries, supporting files and database schemas and data for running Kuali Rice as a web application or within an embedded client application. |

| Source | The source code and build scripts necessary for compiling and building Rice, a process described in the appendices. |

| Server | Rice in the form of a web application archive (WAR) along with database schemas and data. |

The Kuali Foundation makes the most current version of the Rice software available at: http://rice.kuali.org/download

To install Kuali Rice 1.0.3, follow these steps:

Install a database. Refer to the appendices for detailed instructions on how to do this.

Create a non-privileged user that will run Rice.

Install a JDK

Configure the Operating System.

Install the JDBC drivers.

Set up ImpEx process to create the database schema and populate it.

Obtain the WAR file from the Rice server distribution.

Install and configure a servlet container.

Test the installation

Typically, you use this command to add a user to CentOS:

# useradd –d /home/rice –s `which bash` rice # passwd rice New UNIX password: <enter kualirice> Retype new UNIX password: <enter kualirice> passwd: all authentication tokens updated successfully.

To begin installation with a Kuali Rice provided distribution, just uncompress the software you retrieved from download location at the Rice web site. For the purposes of this guide, we will be focusing on installation of the server distribution.

Uncompress the server distribution downloaded from the Rice site:

Verify that you are logged in as the root user

Change directory to where the distributions are located

# cd /opt/software/distribution

Uncompress the distribution

Follow the steps below to uncompress the Binary distribution:

# mkdir binary # unzip rice-1.0.3-bin.zip -d binary # chmod -R 777 /opt/software

Follow the steps below to uncompress the Server distribution:

# mkdir server # unzip rice-1.0.3-server.zip -d server # chmod -R 777 /opt/software

It is a best practice to modify your system parameters as described here. These are just suggested system parameters. Use your best judgment about how to set up your system.

Verify that you are starting out in the /etc directory:

computername $ pwd /etc

Add this to /etc/profile to setup ant and maven first in the path:

JAVA_HOME=/usr/java/jdk1.6.0_16 CATALINA_OPTS="-Xmx512m -XX:MaxPermSize=256m" ANT_HOME=/usr/local/ant ANT_OPTS="-Xmx1512m -XX:MaxPermSize=192m" MAVEN_HOME=/usr/local/maven MAVEN_OPTS="-Xmx768m -XX:MaxPermSize=128m" GROOVY_HOME=/usr/local/groovy PATH=$JAVA_HOME/bin:$ANT_HOME/bin:$MAVEN_HOME/bin:$GROOVY_HOME/bin:$PATH export JAVA_HOME CATALINA_OPTS ANT_HOME ANT_OPTS MAVEN_OPT GROOVY_HOME

After you have completed your update of the /etc/profile file, please execute this command to immediately update your profile with the changes from above:

source /etc/profile

Warning

You must install the JDBC (Java Database Connectivity) driver for MySQL and Oracle to satisfy the requirement for the structure of the Ant target in the build process.

/java/drivers is a hard coded directory that the Rice scripts use as a default directory in which to search for drivers when the installation scripts are running.

Kuali Rice uses the MySQL Connector/J product as the native JDBC driver.

You can obtain the driver from the MySQL website Once you have downloaded the JDBC driver that corresponds to your version of MySQL, copy it to /java/drivers.

Kuali Rice uses the standard Oracle JDBC driver as the native JDBC driver.

You must download the Oracle JDBC from the Oracle website directly due to licensing restrictions. Please refer to the Sources for Required Software section in this Guide to find the download location for this software. Once you have downloaded the JDBC driver, copy it to /java/drivers.

The ImpEx tool is a Kuali-developed application which is based on Apache Torque. It reads in database structure and data from XML files in a platform independent way and then creates the resulting database in either Oracle or MySQL.

The ImpEx tool is included in the Rice binary and server distributions. It is located in the database/database-impex directory of the relevant archive. If you are building Rice from source, the ImpEx tool can be acquired from Subversion:

svn co https://test.kuali.org/svn/kul-cfg-dbs/trunk

For Oracle users, you must run two scripts on your Oracle database before continuing on to the ImpEx development database ingestion process. Please contact your DBA and have him or her run these two setup scripts as the Oracle SYS user. These scripts will be in the database/database-impex distribution (or the impex subdirectory if you checked out the tool from svn). These are the commands, and they must be run in this order:

create_kul_developer.sql (Creates the KUL_DEVELOPER role and applies grants)

system_grants.sql (Creates the KULUSERMAINT user for Rice Oracle system maintenance)

Note

Please inform your DBA that the above scripts must be run under the Oracle SYS account.

After your DBA runs the above scripts, you must run two more scripts as the kulusermaint:

create_admin_user.sql (Creates the kuluser_admin user)

kuluser_maint_pk.sql (Creates the package containing the functions that the Rice system uses to do Oracle system maintenance)

The Rice team released four different sets of data with Rice 1.0.3. The server distribution contains only the first two listed, whereas the binary distribution contains all four. You can find these datasets in the /database directory of the relevant archive:

bootstrap-server-dataset – This is the core dataset. The Rice standalone server cannot function properly without this data.

demo-server-dataset – This is a superset of the bootstrap-server-dataset which, in addition to necessary bootstrap data, includes sample security groups and workflow documents.

bootstrap-client-dataset – This dataset is necessary for creating a Rice client application

demo-client-dataset – This is a superset of the data in the bootstrap-client-dataset. In addition to necessary bootstrap data, it includes tables used by some of the Rice sample client applications.

This guide does not deal with developing a client application; for more information on creating a Rice client application and the use of the client datasets, see the Global Technical Reference Guide.

After you have uncompressed the distribution (Binary or Server) you will use for Rice, login as the user that you use to run the Tomcat server:

1. Go to the sub-directory, <directory where uncompressed>/database/database-impex. This directory is the same for each of the distributions.

Copy the impex-build.properties.sample file to your home directory, renaming the file to impex-build.properties.

Configure the drivers.directory, the directory where the MySQL and Oracle JDBC drivers are located.

Configure import.torque.database.*. Set the user and password to the user under which you want to run the Rice software.

Configure import.admin.user and import.admin.password.

Set import.admin.url as follows:

import.admin.url=jdbc:mysql://localMySQLServerComputerName:MySQL-port-number/

Example: import.admin.url=jdbc:mysql://localhost:3306/

Set import.admin.url as follows:

import.admin.url=jdbc:mysql://remoteMySQLServerComputerName:MySQL-port-number/

Example: import.admin.url=jdbc:mysql://192.168.25.22:3306/

To setup the database from one of the distributions, change directories to /database/database-impex and use these commands:

ant create-schema ant import

Configure the drivers.directory, the directory where the MySQL and Oracle JDBC drivers are located.

Configure import.torque.database.* for the Oracle setup. Set the user and password to the user under which you want to run the Rice software.

Configure import.admin.user and import.admin.password.

import.admin.url= ${import.torque.database.url}Configure oracle.usermaint.*

To setup the database from one of the distributions, use these Ant commands:

ant create-schema ant import

Once you have downloaded Tomcat , please install it using these steps:

Log in as root

Copy or download the file apache-tomcat-5.5.27.zip to /opt/software/tomcat using this code:

cd /opt/software/Tomcat unzip apache-tomcat-5.5.27.zip -d /usr/local ln -s /usr/local/apache-tomcat-5.5.27 /usr/local/tomcat chown -R rice:rice /usr/local/apache-tomcat-5.5.27 cd /usr/local/tomcat/bin chmod -R 755 *.sh su - rice cd /usr/local/tomcat/bin ./startup.sh

You should see something like this:

Using CATALINA_BASE: /usr/local/tomcat Using CATALINA_HOME: /usr/local/tomcat Using CATALINA_TMPDIR: /usr/local/tomcat/temp Using JRE_HOME: /usr/java/jdk1.6.0_16

Now that Tomcat is running:

To test that Tomcat came up successfully, try to browse to http:// yourlocalip:8080.

If you successfully browsed to http://yourlocalip:8080, then execute this command as the rice user:

./shutdown.sh Using CATALINA_BASE: /usr/local/tomcat Using CATALINA_HOME: /usr/local/tomcat Using CATALINA_TMPDIR: /usr/local/tomcat/temp Using JRE_HOME: /usr/java/jdk1.6.0_16

Warning

At times, Tomcat can have a session related problem with OJB where if you stop the server it won’t start again. This can be fixed by deleting the SESSIONS.ser file in the %TOMCAT_HOME%/work/Catalina/%host name%/%webapp% directory

This can be prevented by setting the saveOnRestart property to be false in the web application’s context.xml file as documented here: http://tomcat.apache.org/tomcat-5.5-doc/config/manager.html.

Copy the kr-dev.war file from the base directory of the server distribution to the directory that contains web applications in your servlet container. For Apache Tomcat 5.5.x, this is [Tomcat-root-directory]/webapps.

Copy the database-specific JDBC driver to the [Tomcat-root-directory]/common/lib.

Login as the root user:

su – rice

MySQL

cp -p /java/drivers/mysql-connector-java-5.1.5-bin.jar /usr/local/tomcat/common/lib

Oracle

cp -p /java/drivers/ojdbc14.jar /usr/local/tomcat/common/lib

By default when it starts, Rice attempts to read the rice-config.xml configuration file from the paths in this order:

/usr/local/rice/rice-config.xml

${rice.base}../../../conf/rice-config.xml

${rice.base}../../conf/rice-config.xml

${additional.config.locations}

The value for rice.base is calculated using different locations until a valid location is found. Kuali calculates it using these locations in this sequence:

ServletContext.getRealPath("/")

catalina.base system property

The current working directory

An example rice-config.xml file is included in the server distribution under web/src/main/config/example-config

To get the rice-config.xml and other Rice configuration files into their correct locations, follow these steps:

Login as root:

mkdir /usr/local/rice chown rice:rice /usr/local/rice chmod 755 /usr/local/rice cd /opt/software/kuali/src/rice-release-1-0-2-br/web/src/main/config/example-config/ cp -p rice-config.xml /usr/local/rice cp -p log4j.properties /usr/local/rice cd /usr/local/rice chown rice:rice log4j.properties chown rice:rice rice-config.xml su - rice cd /usr/local/rice

Modify the database parameters in the rice-config.xml file. The values should conform to the values you used with the ImpEx tool (listed in the impex-build.properties file).

datasource.url=jdbc:mysql://localhost:3306/rice datasource.username=rice datasource.password=kualirice datasource.url=jdbc:mysql://remoteMySQLServerComputerName:3306/rice datasource.username=rice datasource.password=kualirice

If you are using Oracle, the JDBC URL will have this general form:

datasource.url=jdbc:oracle:thin:@remoteMySQLServerComputerName:1521:ORACLE_SID

At this point, you are ready to try to bring up the Tomcat server with the Rice web application:

cd /usr/local/tomcat/bin ./startup.sh

Check if Tomcat and Rice started successfully:

cd /usr/local/tomcat/logs tail -n 500 -f catalina.out

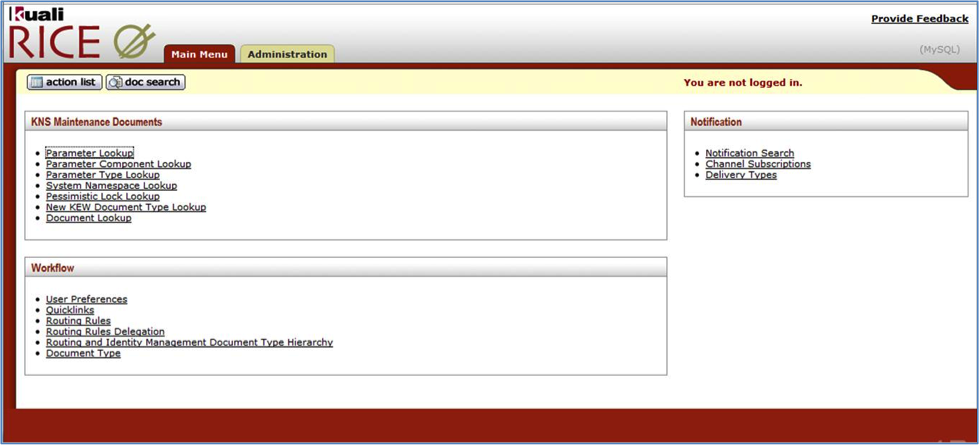

If your Rice server started up successfully, browse to the site http://yourlocalip:8080/kr-dev. You should see the Rice portal screen which will look something like this:

The tables below have the basic set of parameters for rice-config.xml that you need to get an instance of Rice running. Please use these tables as a beginning reference to modify your rice-config.xml file.

Warning

Make sure the application.url and database user name and password are set correctly.

Table 4.3. Core

| Parameter | Description | Examples or Values |

|---|---|---|

| application.url | The external URL used to access the Rice web interface; edit only the fully-qualified domain name and port of the server | http://yourlocalip:8080/kuali-rice-url |

| app.context.name | Context name of the web application

| kuali-rice-url (This value should not be changed) |

| log4j.settings.path | Path to log4j.properties file. If the file does not exist, you must create it. | /usr/local/rice/log4j.properties |

| log4j.settings.reloadInterval | interval (in minutes) to check for changes to the log4j.properties file | 5 |

| mail.smtp.host | SMTP host name or IP (This param is not in the default config.) | localhost |

| config.location | Location of configuration file to load environment-specific configuration parameters (This param is not in the default config.) | /usr/local/rice/rice-config-${environment}.xml |

| sample.enabled | Enable the sample application | boolean |

Table 4.4. Database

| Parameter | Description | Examples or Values |

|---|---|---|

| datasource.ojb.platform | Name of OJB platform to use for the database | Oracle9i or MySQL |

| datasource.platform | Rice platform implementation for the database |

|

| datasource.driver.name | JDBC driver for the database |

|

| datasource.username | User name for connecting to the server database | rice |

| datasource.password | Password for connecting to the server database | |

| datasource.url | JDBC URL of database to connect to |

|

| datasource.pool.minSize | Minimum number of connections to hold in the pool | an integer value suitable for your environment |

| datasource.pool.maxSize | Maximum number of connections to allocate in the pool | an integer value suitable for your environment |

| datasource.pool.maxWait | Maximum amount of time (in ms) to wait for a connection from the pool | 10000 |

| datasource.pool.validationQuery | Query to validate connections from the database | select 1 from dual |

Table 4.5. KSB

| Parameter | Description | Examples or Values |

|---|---|---|

| serviceServletUrl | URL that maps to the KSBDispatcherServlet (include a trailing slash); This param is not in the default config. | |

| keystore.file | Path to the keystore file to use for security | /usr/local/rice/rice.keystore |

| keystore.alias | Alias of the standalone server's key | see section entitled Generating the Keystore |

| keystore.password | Password to access the keystore and the server's key | see section entitled Generating the Keystore |

Table 4.6. KEN

| Parameter | Description | Examples or Values |

|---|---|---|

| notification.basewebappurl | Base URL of the KEN web application (This param is not in the default config.) |

Table 4.7. KEW

| Parameter | Description | Examples or Values |

|---|---|---|

| workflow.url | URL to the KEW web module | ${application.url}/kew |

| plugin.dir | Directory from which plugins will be loaded | /usr/local/rice/plugins |

| attachment.dir.location | Directory where attachments will be stored (This param is not in the default config.) |

Table of Contents

For client applications to consume secured services hosted from a Rice server, you must generate a keystore. As an initial setup, you can use the keystore provided by Rice. There are three ways to get this keystore:

If you are doing a source code build of Rice, it is in the directory <source root>/security and it has a file name of rice.keystore

Note

r1c3pw is the password used for the example provided.

The keystore is also located in the server distribution under the security directory.

Note

keypass and storepass should be the same. r1c3pw is the password used for the example provided

You can generate the keystore yourself. Please refer to the KSB Technical Reference Guide for the steps to accomplish this.

You must have these params in the xml config to allow KSB to use the keystore:

<param name="keystore.file">/usr/local/rice/rice.keystore</param>

<param name="keystore.alias">rice</param>

<param name="keystore.password">r1c3pw</param>

keystore.file - The location of the keystore

keystore.alias - The alias used in creating the keystore above

keystore.password - This is the password of the alias AND the keystore. This assumes that the keystore is set up so that these are the same.

Table of Contents

Table of Contents

Kuali Rice was developed using two relational database management systems:

MySQL

Oracle

Install the MySQL database management system.

If you are installing MySQL after the initial operating system installation, use the RHEL update manager or yum on CentOS and install these packages:

mysql

mysql-server

If you did not install MySQL with the distribution, execute this command line (this assumes that you installed a CentOS 5.3 distribution):

Check if MySQL is installed:

rpm -qa | grep mysql

If the command has the following text in the results, then go down to the step where you check if MySQL is set to start at the appropriate run-levels:

mysql-server-5.1.xx-x.el5 mysql-5.1.xx-x.el5

If the command returns no results, no MySQL packages are installed. In that case, do this:

yum -y install mysql yum -y install mysql-server

Next check that the MySQL server is set to start at the appropriate run-levels:

chkconfig --list | grep mysqld mysqld 0:off 1:off 2:off 3:off 4:off 5:off 6:off

If the word “on” does not appear after the 3, 4, and 5, the MySQL server is set to be started manually. To set the MySQL server to start at the appropriate run-levels, execute this:

chkconfig --level 345 mysqld on

Now, double check the run-levels for the MySQL server:

chkconfig --list | grep mysqld mysqld 0:off 1:off 2:off 3:on 4:on 5:on 6:off

Check if the MySQL server has been started automatically:

ps -ef | grep mysql

If you get the following output:

root 4829 3577 0 22:57 pts/1 00:00:00 grep mysql

Then, start the MySQL daemon with the following command:

/etc/init.d/mysqld start

You should see results similar to this:

Initializing MySQL database: Installing MySQL system tables... OK Filling help tables... OK To start mysqld at boot time you have to copy support-files/mysql.server to the right place for your system PLEASE REMEMBER TO SET A PASSWORD FOR THE MySQL root USER ! To do so, start the server, then issue the following commands: /usr/bin/mysqladmin -u root password 'new-password' /usr/bin/mysqladmin -u root -h krice password 'new-password' See the manual for more instructions. You can start the MySQL daemon with: cd /usr ; /usr/bin/mysqld_safe & You can test the MySQL daemon with mysql-test-run.pl cd mysql-test ; perl mysql-test-run.pl Please report any problems with the /usr/bin/mysqlbug script! The latest information about MySQL is available on the web at http://www.mysql.com Support MySQL by buying support/licenses at http://shop.mysql.com [ OK ] Starting MySQL: [ OK ]

These instructions assume that this is a fresh installation of Linux and NO MySQL server has been installed on the computer. Use the RHEL update manager or yum on CentOS and install this package:

mysql

mysql-server

If you did not install MySQL with the distribution, execute this command line (this assumes that you installed a CentOS 5.3 distribution):

Check if MySQL is installed:

rpm -qa | grep mysql

If the command has the following text in the results, then go down to the step where you check if MySQL is set to start at the appropriate run-levels:

mysql-server-5.1.xx-x.el5 mysql-5.1.xx-x.el5

If the command returns no results, no MySQL packages are installed. In that case, do this:

yum -y install mysql yum -y install mysql-server

Next check that the MySQL server is set to start at the appropriate run-levels:

chkconfig --list | grep mysqld mysqld 0:off 1:off 2:off 3:off 4:off 5:off 6:off

If the word “on” does not appear after the 3, 4, and 5, the MySQL server is set to be started manually. To set the MySQL server to start at the appropriate run-levels, execute this:

chkconfig --level 345 mysqld on

Now, double check the run-levels for the MySQL server:

chkconfig --list | grep mysqld mysqld 0:off 1:off 2:off 3:on 4:on 5:on 6:off

Check if the MySQL server has been started automatically:

ps -ef | grep mysql

If you get the following output:

root 4829 3577 0 22:57 pts/1 00:00:00 grep mysql

Then, start the MySQL daemon with the following command:

/etc/init.d/mysqld start

You should see results similar to this:

Initializing MySQL database: Installing MySQL system tables... OK Filling help tables... OK

To start mysqld at boot time you have to copy support-files/mysql.server to the right place for your system.

Warning

Remember to set a password for the MySQL root User!

To do so, start the server and then issue the following commands:

/usr/bin/mysqladmin -u root password 'new-password'

/usr/bin/mysqladmin -u root -h Rice password 'new-password'

See the manual for more instructions.

You can start the MySQL daemon with:

cd /usr ; /usr/bin/mysqld_safe &

You can test the MySQL daemon with mysql-test-run.pl

cd mysql-test ; perl mysql-test-run.pl

Please report any problems with the /usr/bin/mysqlbug script!

The latest information about MySQL is available on the web at

http://www.mysql.com

Support MySQL by buying support/licenses at http://shop.mysql.com

[ OK ]

Starting MySQL: [ OK ]

This completes the MySQL server installation. Next, you install the MySQL client on the Rice computer.

Log onto your MySQL/Rice computer as the root user. If you are installing MySQL after the initial operating system installation, use the RHEL update manager or yum on CentOS and install the MySQL package.

If you did not install MySQL with the distribution, execute this in the command line (this assumes that you installed a CentOS 5.3 distribution):

Check if MySQL is installed:

rpm –qa | grep mysql

If the command has this text in the results, then go to the second step after this:

mysql-5.1.xx-x.el5

If the command returns no results, no MySQL packages are installed. In that case, do this:

yum -y install mysql

→ Now, edit the /etc/hosts file and enter the IP address and the host name of the computer where the MySQL server is located. In the following example, kmysql is name of the MySQL server:

<ip address of mysql server> kmysql

Once you have installed MySQL, ensure that MySQL is running by performing this on the computer where the MySQL server is running:

mysqladmin -u root -p version Enter password: [hit return, enter nothing]

Warning

If you see the following text, your MySQL server is NOT running:

mysqladmin: connect to server at ‘computername’ failed error: ‘Can’t connect to local MySQL server through socket ‘/var/lib/mysql/mysql.sock’ (2)’ Check that mysqld is running and that the socket ‘/var/lib/mysql/mysql.sock’ exists!

If your MySQL server is NOT running, execute this on the computer as root where the MySQL server is installed:

/etc/init.d/mysqld start

If you see something similar to this, your MySQL server is running:

mysqladmin Ver 8.41 Distrib 5.0.45, for redhat-linux-gnu on i686 Copyright (C) 2000-2006 MySQL AB This software comes with ABSOLUTELY NO WARRANTY. This is free software, and you are welcome to modify and redistribute it under the GPL license Server version 5.0.45 Protocol version 10 Connection Localhost via UNIX socket UNIX socket /var/lib/mysql/mysql.sock Uptime: 34 sec Threads: 1 Questions: 1 Slow queries: 0 Opens: 12 Flush tables: 1 Open tables: 6 Queries per second avg: 0.029

If this is a new MySQL install, you have to set the initial password. To do so after you have started the MySQL daemon, execute this command:

mysqladmin –u root password ‘new-password’

After initial installation, you must set the MySQL root password. This applies to all platforms: Standalone and Developer Platform and Production Platform. To set the MySQL root password, do this on the computer where the MySQL server is running (substitute the name of the machine for ‘computername’):

mysqladmin -u root password ‘kualirice’ mysql -u root --password=”kualirice” mysql> use mysql mysql> set password for ‘root’@’localhost’=password(‘kualirice’); mysql> set password for ‘root’@’127.0.0.1’=password(‘kualirice’); mysql> set password for ‘root’@’computername’=password(‘kualirice’); mysql> mysql> grant all on *.* to ‘root’@’localhost’ with grant option; mysql> grant all on *.* to ‘root’@’127.0.0.1’ with grant option; mysql> grant all on *.* to ‘root’@’computername’ with grant option; mysql> mysql> grant create user on *.* to ‘root’@’localhost’ with grant option; mysql> grant create user on *.* to ‘root’@’127.0.0.1’ with grant option; mysql> grant create user on *.* to ‘root’@’computername’ with grant option; mysql> mysql> quit

If your MySQL server is running remotely (this is usually true for the Production Platform), do this on the computer where the MySQL server is running:

mysql -u root --password=”kualirice” mysql> use mysql mysql> create user ‘root’@’client_computername’; mysql> mysql> set password for ‘root’@’client_computername’=password(‘kualirice’); mysql> mysql> grant all on *.* to ‘root’@’client_computername’ with grant option; mysql> mysql> grant create user on *.* to ‘root’@’client_computername’ with grant option; mysql> mysql> quit

When you install MySQL, you must decide what port number the database will use for communications. The default port is 3306. If you decide to have MySQL communicate on a port other than the default, please refer to the MySQL documentation to determine how to change the port. These instructions assume for Quick Start Recommended Best Practice sections that the MySQL communications port remains at the default value of 3306.

Please ensure your MySQL server has the following settings at a minimum. If not, set them and then restart your MySQL daemon after modifying the configuration file, my.cnf.

Note

These settings reflect a CentOS 5.3 distribution using the distribution-installed MySQL packages. They are stored in /etc/my.cnf.

Only the root user can change these settings, and they should only be changed when the database server is not running.

[mysqld] max_allowed_packet=20M transaction-isolation=READ-COMMITTED lower_case_table_names=1 max_connections=1000 innodb_locks_unsafe_for_binlog=1

Warning

It is very important to verify that the default transaction isolation is set to READ-COMMITTED. KEW uses some ‘SELECT ... FOR UPDATE’ statements that do NOT function properly with the default MySQL isolation of REPEATABLE-READ.

innodb_locks_unsafe_for_binlog=1 is only necessary if you are running MySQL 5.0.x. This behavior has been changed in MySQL 5.1+ so that, in 5.1+, this command is NOT necessary as long as you specify READ-COMMITTED transaction isolation.

3. If you make the changes to your MySQL configuration specified above, you will have to restart your MySQL server for these changes to take effect. You can restart your MySQL daemon by executing this command:

/etc/init.d/mysqld restart

Optional: To run the database completely on your local machine, we recommend installing Oracle 10g Express (XE). Please refer to the Sources for Required Software section of this Installation Guide to find the download location for this software.

By default, OracleXE registers a web user interface on port 8080. This is the same port that the standalone version of Rice is preconfigured to use. To avoid a port conflict, you must change the port that the OracleXE web user interface uses with the Oracle XE admin webapp:

If you prefer, you can use the Oracle SQL tool described here to change the OracleXE web user interface port: http://daust.blogspot.com/2006/01/xe-changing-default-http-port.html

Optional: To connect to the supporting Oracle database (i.e., run scripts, view database tables, etc.), we recommend installing the Squirrel SQL client. Please refer to the Sources for Required Software section to find the download location for this software.

The Rice SQL files use slash ‘/‘ as the statement delimiter. You may have to configure your SQL client appropriately so it can run the Rice SQL. In SQuirreL, you do this in Session->Session Properties->SQL->Statement Separator.

Please edit your hosts file with an entry to refer to your Oracle database. When this Installation Guide refers to the Oracle database host server, it will be referred to in the examples as koracle.

Now edit the hosts file and add this:

<ip address of mysql server> koracle

Table of Contents

An example setup of a single Tomcat server running MySQL server:

CentOS v5.3 x64

4 GB Ram

Intel Q6600 Quad Core Processor or better

1 TB RAID 1 configuration – SATA II 3.0 Gbps

Apache

Tomcat

MySQL 5.1.x

An example of a multiple server configuration:

CentOS v5.3 x64

Intel (64 bit architecture)

1 GB Ram

80 GB RAID 1 configuration - SATA II 3.0 Gbps

Apache

Tomcat connector

CentOS v5.3 x64

Intel Q6600 Quad Core Processor or better

4 GB Ram

80 GB RAID 1 configuration – SATA II 3.0 Gbps

Tomcat

Table of Contents

Warning

When you install Java on the server to run Kuali Rice 0.0.16-test-SNAPSHOT, please make a note of the installation directory. You must have this information to configure the other Rice products.

Note

Version 1.0.0 of Rice was compiled with Java 5 and maintained source code compatibility with Java 5 as well. Starting with the 1.0.1.1 release, Rice is now being compiled using Java 6, although the source code still remains compatible with Java 5. It is recommended that client applications begin using Java 6 for compiling and running Rice.

In a prior step, you should have uploaded the Java installation file, jdk-1_5_0_18-linux-i586-rpm.bin, to the directory, /opt/software/java. Change your current directory to that directory.

cd /opt/software/java

Change the file to have executable attributes.

chmod 777 *

Run the file with this command:

./jdk-1_5_0_18-linux-i586-rpm.bin

This puts your Java JDK software in /usr/java/jdk1.6.0_16/.

Note

This directory is used throughout the rest of the Quick Start Recommended Best Practices sections in this Installation Guide as the Java home directory

Warning

Using a default IBM JDK will result in the failure of Rice to startup do to incompatibilities between cryptography packages. In order to make the IBM JDK work with Rice, the bcprov-*.jar needs to be removed from the classpath.

Note

This step should not be necessary for binary and server distributions.

Rice is sensitive to the versions of Ant and Maven that you use.

Please check the requirements for Rice and then verify, install, and use the required versions.

These software packages can be installed in any directory, as long as their bin directories are specified in the path first.

Install Ant and Maven to these directories:

Ant: /usr/local/apache-ant-1.7.1

Change your current directory to the directory where the Ant software zip is located, /opt/software/ant.

Uncompress the Ant zip file.

Create a symbolic link to /usr/local/ apache-ant-1.7.1 in /usr/local.

For example:

cd /opt/software/ant tar xvfz apache-ant-1.7.1-bin.tar.gz -C /usr/local ln -s /usr/local/apache-ant-1.7.1 /usr/local/ant

Maven: /usr/local/maven

Change your current directory to the directory where the Maven software zip is located, /opt/software/maven.

Uncompress the Maven zip file.

Create a symbolic link to /usr/local/apache-maven-2.0.9 in /usr/local.

For example:

cd /opt/software/maven tar xvfz apache-maven-2.0.9-bin.tar.gz -C /usr/local ln -s /usr/local/apache-maven-2.0.9 /usr/local/maven

Please verify that you have installed the subversion client. As long as your Linux distribution has a subversion client that meets the required version for Rice, this should be sufficient. Please refer to the Sources for Required Software section of this Installation Guide for the download location and the required subversion client software version.

You can verify that you have the subversion client with this command:

rpm -qa | grep sub

If you have the subversion client installed, you will see something similar to this:

subversion-1.4.2-4.el5

After you have downloaded the ImpEx tool from the source code repository, log in as the non-authoritative user that you use to run the Tomcat server:

Go to the sub-directory, <downloaded dir>/trunk/impex, from which you downloaded the kul-cfg-dbs module.

Copy the impex-build.properties.sample file to your home directory, renaming the file to impex-build.properties.

Then, configure the file, impex-build.properties, for the Ant utilities to set up the database.

For retrieving the most current Rice database, verify that these parameters are set to:

svnroot=https://test.kuali.org/svn/

svn.module =rice-cfg-dbs

svn.base= branches/rice-release-1-0-2-br

torque.schema.dir=<root or where you wish to download dev dbs>/${svn.module}

torque.sql.dir=${torque.schema.dir}/sql

# then, to overlay a KFS/KC/KS database on the base rice database, use the parameters below

# If these parameters are commented out, the impex process will only use the information above

#svnroot.2=https://test.kuali.org/svn/

#svn.module.2=kfs-cfg-dbs

#svn.base.2=trunk

#torque.schema.dir.2=../../${svn.module.2}

#torque.sql.dir.2=${torque.schema.dir.2}/sqland verify that the second set of svn parameters (svnroot.2, svn.module.2) are commented out or deleted for a Rice only/non-Kuali Financials, Kuali Student or Kuali Coeus installation.

For retrieving the most current Rice database, verify that these parameters are set to:

svnroot=https://test.kuali.org/svn/

svn.module =rice-cfg-dbs

svn.base= branches/rice-release-1-0-2-br

torque.schema.dir=<root or where you wish to download dev dbs>/${svn.module}

torque.sql.dir=${torque.schema.dir}/sql

# then, to overlay a KFS/KC/KS database on the base rice database, use the parameters below

# If these parameters are commented out, the impex process will only use the information above

#svnroot.2=https://test.kuali.org/svn/

#svn.module.2=kfs-cfg-dbs

#svn.base.2=trunk

#torque.schema.dir.2=../../${svn.module.2}

#torque.sql.dir.2=${torque.schema.dir.2}/sqlVerify that the second set of svn parameters (svnroot.2, svn.module.2) are commented out or deleted for a Rice only/non-Kuali Financials, Kuali Student or Kuali Coeus installation.

Ant must be installed. (It should be already.)

Maven must be installed. (It should be already.)

Subversion must be installed.

Retrieve the source code from Kuali: Download Kuali source zip distribution from the Kuali website or retrieve the source code from the Subversion repository. You can find both of these in the Recommended Software Sources section.

To begin working with the source code:

To work with one of the Kuali distributions: i. Unzip the software into /opt/software/kuali/src OR

Check out the source code from the Subversion repository.

To retrieve and compile the source code from the source code repository, log in as root and enter this:

cd /opt/software/kuali mkdir src cd src svn co https://test.kuali.org/svn/rice/branches/rice-release-1-0-2-br/ cd .. pwd /opt/software/kuali chmod -R 777 /opt/software/kuali/src su - rice cd ~rice → JUST TO VERIFY YOU ARE IN RICE’S HOME DIRECTORYTo compile the source code from the Binary distribution, first uncompress the software:

cd /opt/software/distribution/ mkdir src unzip rice-1.0.3-src.zip -d src chmod -R 777 /opt/software su - rice cd ~rice → JUST TO VERIFY YOU ARE IN RICE’S HOME DIRECTORY

Create a file with VI named kuali-build.properties. This file should be in the root directory of the non-authoritative user that runs the Tomcat server.

The contents should be:

maven.home.directory=/root/of/maven/installation… maven.home.directory=/usr/local/maven

Change directory:

For the source code from the Subversion repository:

cd /opt/software/kuali/src/rice-release-1-0-2-br

For the source code from the Binary distribution:

cd /opt/software/distribution/src

Install the Oracle JDBC driver into the Maven repository.

Warning

You must run the Ant command to install the Oracle JDBC into the Maven repository from the root of the source code directory. In the Quick Start Recommended Best Practices sections, use these directories:

For the source code repository, checkout: /opt/software/kuali/src/rice-release-1-0-2-br

For the source code distribution: /opt/software/distribution/src

An Apache Ant script called install-oracle-jar installs this dependency; however, due to licensing restrictions you must download the driver from the Oracle website. Please refer to the Sources for Required Software section to find the download location for this software.

Once you have downloaded the JDBC driver, copy it to the /java/drivers directory. The Apache Ant script, located in the source code directory, will look for ojdbc14.jar in {java root}/drivers and install the necessary file.

To install the Oracle JDBC driver in the Maven repository, run this command:

ant install-oracle-jar

Default directory - /java/drivers – Ant looks there as a default. This can be overridden by executing this command:

ant -Ddrivers.directory=/my/better/directory install-oracle-jar

To build the WAR file from source, enter:

ant dist-war

At this point, you have built the WAR file. It is in a subdirectory called target. The WAR file is named rice-dev.war.

To verify that the WAR file was built:

cd /opt/software/kuali/src/rice-release-1-0-2-br/target ls -la total 44972 drwxrwxr-x 3 rice rice 4096 Jun 1 00:11 . drwxrwxrwx 19 root root 4096 May 31 23:59 .. drwxrwxr-x 3 rice rice 4096 Jun 1 00:11 ant-build -rw-rw-r-- 1 rice rice 45987663 Jun 1 00:11 kr-dev.war

Copy the WAR file from the target subdirectory to the Tomcat webapps directory.

cp -p kr-dev.war /usr/local/tomcat/webapps/kualirice.war

Note

These details are repeated from the previous section on running a productionplatform

This describes how to set up Rice instances for a load-balanced production environment across multiple computers.

The configuration parameter ${environment} must be set to the text: prd

When the configuration parameter ${environment} is set to prd, the code triggers:

Sending email to specified individuals

Turning off some of the Rice “back doors”

The high-level process for creating multiple Rice instances:

Ensure that these are set up properly so no additional configuration is needed during installation:

Quartz is configured properly for clustering (there are various settings that make this possible).

The initial software setup has the proper configuration to support a clustered production environment.

Rice’s initial settings are in the file, common-config-defaults.xml.

Here are some of the parameters in the common-config-defaults.xml that setup Quartz for clustering:

<param name="useQuartzDatabase" override="false">true</param> <param name="ksb.org.quartz.scheduler.instanceId” override="false">AUTO</param> <param name="ksb.org.quartz.scheduler.instanceName" override="false">KSBScheduler</param> <param name="ksb.org.quartz.jobStore.isClustered" override="false">true</param> <param name="ksb.org.quartz.jobStore.tablePrefix" override="false">KRSB_QRTZ_</param>

If it becomes necessary to pass additional parameters to Quartz during rice startup, just add parameters in the rice-config.xml file prefixed with ksb.org.quartz.*

The parameter useQuartzDatabase MUST be set to true for Quartz clustering to work. (This is required because it uses the database to accomplish coordination between the different scheduler instances in the cluster.)

Ensure that all service bus endpoint URLs are unique on each machine: Make sure that each Rice server in the cluster has a unique serviceServletUrl parameter in the rice-config.xml configuration file.

One way to accomplish this is to pass a system parameter into the JVM that runs the Tomcat server that identifies the IP and port number of that particular Tomcat Server. For example:

-Dhttp.url=129.79.216.156:8806

You can then configure your serviceServletUrl in the rice-config.xml to use that IP and port number.

<param name="serviceServletUrl">http://${http.url}/${app.context.name}/remoting/</param>You could have different values for serviceServletUrl in the rice-config.xml on each machine in the cluster.

If you are using notes and attachments in workflow, then the attachment.dir.location parameter must point to a shared file system mount (one that is mounted by all machines in the cluster).

The specifics of setting up and configuring a shared file system location are part of how you set up your infrastructure environment. Those are beyond the scope of this Guide.

In general, to accomplish a load-balanced clustered environment, you must implement some type of load balancing technology with session affinity (i.e., it keeps the browser client associated with the specific machine in the cluster that it authenticated with). An example of a load balancing appliance-software is the open source product, Zeus.

Table of Contents

There are two different structural methods to run multiple instances of Rice within a single Tomcat instance. You can use either method:

Run a staging and a test environment. This requires a rebuild of the source code.

Run multiple instances of a production environment. This requires modification of the Tomcat WEB-INF/web.xml.

To show you how to set up a staging and a test environment within one Tomcat instance, this section presents the configuration recipe as though it were a Quick Start Best Practices section. This means that this section will be laid out using the Quick Start Best Practices section format and system directory structure. It presents a basic process, method, and guide to what you need to do to get a staging and test environment up within a single Tomcat instance. You could accomplish this functionality many different ways; these sections present one of those ways.

This describes how to set up the Rice instances of kualirice-stg and kualirice-tst instances pointing to the same database. However, you could set up two different databases, one for staging and one for testing. How you configure Rice for the scenario of a database for the “stg” instance and a separate database for the “tst” instance depends on how you want to set up Rice. That scenario is not documented here.

We are assuming that you performed all the installation steps above to compile the software from source and deploy the example kualirice.war file. This example begins with rebuilding the source to create a test and staging instance compilation.

You must compile the source code with a different environment variable. To add the environment variable, environment, to the WAR file’s WEB-INF/web.xml file, recompile the source code with this parameter:

ant -Drice.environment=some-environment-variable dist-war

To begin: Log in as the rice user.

Shut down your Tomcat server.

cd /usr/local/tomcat/bin ./shutdown.sh Using CATALINA_BASE: /usr/local/tomcat Using CATALINA_HOME: /usr/local/tomcat Using CATALINA_TMPDIR: /usr/local/tomcat/temp Using JRE_HOME: /usr/java/jdk1.6.0_16

Recompile your WAR files with the specific environment variables:

cd /opt/software/kuali/src/rice-release-1-0-2-br ant -Drice.environment=stg dist-war cd target/ cp -p kr-stg.war /usr/local/tomcat/webapps/kualirice-stg.war cd /opt/software/kuali/src/rice-release-1-0-2-br ant -Drice.environment=tst dist-war cp -p rice-tst.war /usr/local/tomcat/webapps/kualirice-tst.war

Adding an environment variable to the application config variable will setup Rice to point to the two different instances. To allow each instance to point to the same database, edit the rice-config.xml and modify the application.url to correctly point your Rice to load the correct setup:

<param name="application.url">http://yourlocalip:8080/kualirice-${environment}</param>Now start up your Tomcat server:

cd /usr/local/tomcat/bin ./startup.sh Using CATALINA_BASE: /usr/local/tomcat Using CATALINA_HOME: /usr/local/tomcat Using CATALINA_TMPDIR: /usr/local/tomcat/temp Using JRE_HOME: /usr/java/jdk1.6.0_16

If your Rice instances started up successfully, browse to the sites http://yourlocalip:8080/kualirice-stg and http://yourlocalip:8080/kualirice-tst. You should see the Rice sample application for each site.

Next, shut down your Tomcat server:

cd /usr/local/tomcat/bin ./shutdown.sh Using CATALINA_BASE: /usr/local/tomcat Using CATALINA_HOME: /usr/local/tomcat Using CATALINA_TMPDIR: /usr/local/tomcat/temp Using JRE_HOME: /usr/java/jdk1.6.0_16

To create specific configuration parameters for the specific instances of Rice, add this to the rice-config.xml.

<param name="config.location">/usr/local/rice/rice-config-${environment}.xml</param>Next, copy the rice-config.xml to both staging and test to enter instance-specific configuration into each of the resulting xml files:

cd /usr/local/rice cp -p rice-config.xml rice-config-stg.xml cp -p rice-config.xml rice-config-tst.xml

Remove anything from rice-config.xml that is specific to the stg or tst implementation. Put those specific stg or tst parameters in the rice-config-stg.xml or rice-config-tst.xml file, respectively.

Now start up your Tomcat server:

cd /usr/local/tomcat/bin ./startup.sh Using CATALINA_BASE: /usr/local/tomcat Using CATALINA_HOME: /usr/local/tomcat Using CATALINA_TMPDIR: /usr/local/tomcat/temp Using JRE_HOME: /usr/java/jdk1.6.0_16

If your Rice instances started up successfully, browse to the sites http://yourlocalip:8080/kualirice-stg and http://yourlocalip:8080/kualirice-tst. You should see the Rice sample application for each site.

As a best practice:

Put all common properties and settings across all Rice instances in the rice-config.xml.

Put instance-specific settings in rice-config-stg.xml and rice-config-tst.xml.

This describes how to set up two production Rice instances running side by side.

The configuration parameter ${environment} must be set to the text: prd

When the configuration parameter ${environment} is set to prd, the code:

Sends email to specified individuals

Turns off some of the Rice “back doors”

This assumes that you performed all the installation steps above to compile the software from source and deploy the example kualirice.war file. This example starts from rebuilding the source to accomplish a test and staging instance compilation.

Create a riceprd1 and riceprd2 database for the first production and second production instance, respectively.

Build the WAR file from the source code.

Unzip the WAR file in a temporary work directory.

Add an environment variable, prd1, to the WEB-INF/web.xml in the unzipped-war-file-directory.

Re-zip the WAR file into kualirice-prd1.war.

Copy kualirice-prd1.war to /usr/local/tomcat/webapps.

Change the environment variable from prd1 to prd2 in the WEB-INF/web.xml in the unzipped-war-file-directory.

Re-zip the WAR file into kualirice-prd2.war.

Copy kualirice-prd2.war to /usr/local/tomcat/webapps.

In /usr/local/rice, copy rice-config.xml to rice-config-prd1.xml.

In /usr/local/rice, copy rice-config.xml to rice-config-prd2.xml.

In rice-config.xml, remove any instance-specific parameters.

Modify rice-config-prd1.xml for instance-specific parameters.

Modify rice-config-prd2.xml for instance-specific parameters.

Start up Tomcat.

Here are the details:

Start by logging in as the rice user.

Shut down your Tomcat server.

cd /usr/local/tomcat/bin ./shutdown.sh Using CATALINA_BASE: /usr/local/tomcat Using CATALINA_HOME: /usr/local/tomcat Using CATALINA_TMPDIR: /usr/local/tomcat/temp Using JRE_HOME: /usr/java/jdk1.6.0_16

Set Up the ImpEx Process to Build the Database for the process to create the riceprd1 and riceprd2 databases.

Set your directory to the rice home directory:

cd ~ vi impex-build.properties

For the rice-prd1 database, modify this in the ImpEx file:

# # Uncomment these for a local MySQL database # import.torque.database = mysql import.torque.database.driver = com.mysql.jdbc.Driver import.torque.database.url = jdbc:mysql://kmysql:3306/riceprd1 import.torque.database.user=riceprd1 import.torque.database.schema=riceprd1 import.torque.database.password=kualirice

Save the file, change directory to the folder where the ImpEx build.xml is, and create the database:

cd /opt/software/kuali/db/trunk/impex ant create-schema ant satellite-update

You may receive this error because the ANT and SVN processes cannot write to a directory on the hard drive:

Buildfile: build.xml Warning: Reference torque-classpath has not been set at runtime, but was found during build file parsing, attempting to resolve. Future versions of Ant may support referencing ids defined in non-executed targets. satellite-update: Warning: Reference torque-classpath has not been set at runtime, but was found during build file parsing, attempting to resolve. Future versions of Ant may support referencing ids defined in non-executed targets. satellite-init: [echo] Running SVN update in /opt/software/kuali/devdb/rice-cfg-dbs [svn] <Update> started ... [svn] svn: '/opt/software/kuali/devdb/rice-cfg-dbs' is not a working copy [svn] svn: Cannot read from '/opt/software/kuali/devdb/rice-cfg-dbs/.svn/format': /opt/software/kuali/devdb/rice-cfg-dbs/.svn/format (No such file or directory) [svn] <Update> failed ! BUILD FAILED /opt/software/kuali/db/trunk/impex/build.xml:825: Cannot update dir /opt/software/kuali/devdb/rice-cfg-dbs Total time: 3 secondsIf you received the error above, go to the window where the root user is logged in and execute this command:

rm -rf /opt/software/kuali/devdb/rice-cfg-dbs

Then return to where you have the rice user logged in and re-execute the command:

ant satellite-update

The creation of the Rice riceprd1 database should begin at this time.

For the rice-prd2 database, modify this in the ImpEx file:

# # Uncomment these for a local MySQL database # import.torque.database = mysql import.torque.database.driver = com.mysql.jdbc.Driver import.torque.database.url = jdbc:mysql://kmysql:3306/riceprd2 import.torque.database.user=riceprd2 import.torque.database.schema=riceprd2 import.torque.database.password=kualirice

Save the file, change directory to the folder where the ImpEx build.xml is, and create the database:

cd /opt/software/kuali/db/trunk/impex ant create-schema ant satellite-update

You may get this error because the ANT and SVN processes cannot write to a directory on the hard drive: